Deploy a Kubernetes Cluster on Ubuntu 20.04 with containerd

When building cloud infrastructure, it’s crucial to keep scalability, automation, and availability top of mind.

In the past, it was really difficult to uphold high standards of scalability, automation, and availability in the cloud. Only the top IT companies could do it. But today, courtesy of Kubernetes, anyone can build a self-sufficient, auto-healing, and auto-scaling cloud infrastructure.

All it takes is a few YAML files.

In this article, we’ll go through all the steps you need to set up a Kubernetes cluster on two Ubuntu 20.04 machines.

What is Kubernetes?

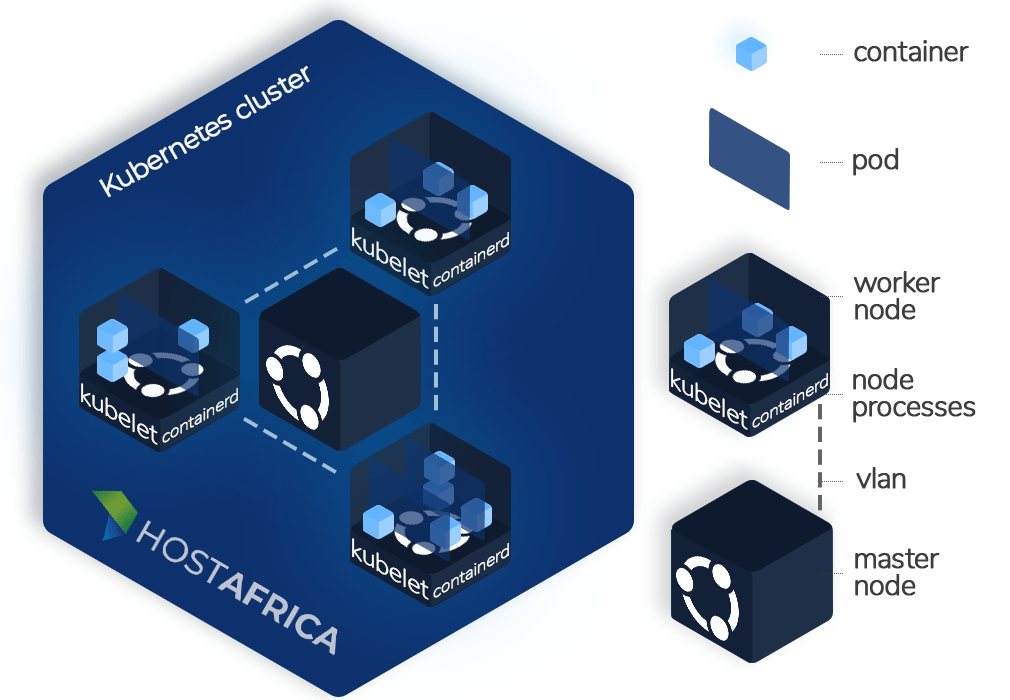

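Kubernetes is open-source software that runs containerized applications, grouped into pods, across a cluster of master and worker nodes. A typical cluster has at least one master node and one worker node, which is the setup we build here. A pod is the smallest deployable unit in Kubernetes: a group of one or more containers that share networking and storage.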

The master node is responsible for managing the cluster and ensuring that the desired state (defined in the YAML configuration files) is always maintained.

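If a pod fails, the master node replaces it. If a node goes down, the workloads that were running on it are recreated on healthy nodes. With an autoscaler such as the HorizontalPodAutoscaler configured, the cluster can also respond to a substantial rise in traffic by spawning new pods.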

The master node is accompanied by worker node(s). These run the application containers. Each Kubernetes node has the following components:

- Kube-proxy: A network proxy on each node that maintains the network rules that let pods communicate with each other and with Services.

- Kubelet: An agent on each node that starts the pods assigned to it and maintains their state and lifecycle.

- Container runtime: The software that actually runs containers and lets them interact with the operating system. Docker used to be the most widely used container runtime until the Kubernetes team deprecated dockershim, the built-in component that let the kubelet talk to Docker, in v1.20.

As of v1.24, dockershim has been removed from the Kubernetes source code, so Docker Engine can no longer be used as a runtime out of the box. The recommended alternatives are containerd and CRI-O.

However, you can still use Docker through cri-dockerd, an open-source adapter that connects Docker Engine to Kubernetes through the Container Runtime Interface (CRI).

In this article, we will be using containerd as our runtime. Let’s begin!

Step 1. Install containerd

To install containerd, follow these steps on both VMs:

- Load the `overlay` and `br_netfilter` kernel modules required for networking, and configure them to load at boot.

```shell
sudo modprobe overlay
sudo modprobe br_netfilter
cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf
overlay
br_netfilter
EOF
```
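To confirm that both modules are actually loaded (the same information `lsmod` shows), you can use a small check like the one below. It is a sketch. The function name `missing_modules` is our own, and a module that is built directly into the kernel will not appear in `/proc/modules` even though it is available.

```shell
#!/usr/bin/env bash
# Print each required module that does not appear in a module list.
# The list defaults to /proc/modules (what lsmod reads); the optional
# argument exists only so the logic can be tried against sample data.
missing_modules() {
  local list="${1:-/proc/modules}" mod
  for mod in overlay br_netfilter; do
    # The first whitespace-separated field of each line is the module name.
    if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$list"; then
      echo "$mod"
    fi
  done
}
```

Run `missing_modules` with no argument on each VM. No output means both modules are loaded.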

- To let iptables see bridged traffic, as Kubernetes requires, and to enable IP forwarding, set the following kernel parameters to `1`:

```shell
sudo tee /etc/sysctl.d/kubernetes.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
```

- Apply the new settings without rebooting.

```shell
sudo sysctl --system
```
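To confirm that the three parameters took effect, `sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward` should report a value of 1 for each. The sketch below performs the same check by reading the files under `/proc/sys` that back each sysctl key. The function name and the `PROC_SYS` override are hypothetical; the override exists only so the logic can be tested against a fake directory tree.

```shell
#!/usr/bin/env bash
# Verify that the kernel parameters from /etc/sysctl.d/kubernetes.conf are 1.
# Each sysctl key maps to a file under /proc/sys, with dots turned into slashes.
# PROC_SYS is a hypothetical override for testing against a fake tree.
check_k8s_sysctls() {
  local root="${PROC_SYS:-/proc/sys}" key value status=0
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    # A missing file (e.g. br_netfilter not loaded) is reported as "missing".
    value=$(cat "$root/${key//.//}" 2>/dev/null || echo missing)
    if [ "$value" = "1" ]; then
      echo "OK   $key"
    else
      echo "FAIL $key ($value)"
      status=1
    fi
  done
  return "$status"
}
```

Run `check_k8s_sysctls` on each VM. It prints one OK or FAIL line per key and returns a non-zero status if any value is not 1. The `net.bridge.*` entries exist only once `br_netfilter` is loaded.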

- Install curl.

```shell
sudo apt install curl -y
```

- Add Docker's GPG key with `apt-key`, then add the repository from which we will install containerd.

```shell
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
```

Expect an output similar to the following:

```
Get:1 https://download.docker.com/linux/ubuntu focal InRelease [57.7 kB]
Get:2 https://download.docker.com/linux/ubuntu focal/stable amd64 Packages [17.6 kB]
Get:3 http://security.ubuntu.com/ubuntu focal-security InRelease [114 kB]
Hit:4 http://archive.ubuntu.com/ubuntu focal InRelease
Get:5 http://archive.ubuntu.com/ubuntu focal-updates InRelease [114 kB]
Get:6 http://archive.ubuntu.com/ubuntu focal-backports InRelease [108 kB]
Fetched 411 kB in 2s (245 kB/s)
Reading package lists... Done
```
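The `[arch=amd64]` part of the repository line hard-codes 64-bit x86 machines. If your VMs might be ARM64 instead, you can build the line from the machine's own architecture and Ubuntu codename. This is a sketch under that assumption, and the helper name `docker_repo_line` is hypothetical.

```shell
#!/usr/bin/env bash
# Build the Docker APT source line from an architecture and an Ubuntu codename.
# On a real VM these come from `dpkg --print-architecture` and `lsb_release -cs`;
# taking them as arguments keeps the function easy to test.
docker_repo_line() {
  local arch="$1" codename="$2"
  printf 'deb [arch=%s] https://download.docker.com/linux/ubuntu %s stable\n' \
    "$arch" "$codename"
}
```

On the VM, call it as `sudo add-apt-repository "$(docker_repo_line "$(dpkg --print-architecture)" "$(lsb_release -cs)")"`. Here `dpkg --print-architecture` prints a value such as `amd64` or `arm64`.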

- Update and then install the containerd package.

```shell
sudo apt update -y
sudo apt install -y containerd.io
```

- Set up the default configuration file.

```shell
sudo mkdir -p /etc/containerd
sudo containerd config default | sudo tee /etc/containerd/config.toml
```

- Next up, we need to modify the containerd configuration file so that containerd uses the `systemd` cgroup driver. To do so, edit the following file:

```shell
sudo vi /etc/containerd/config.toml
```

Scroll down to the following section:

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
```

Make sure the value of `SystemdCgroup` is set to `true`. The contents of your section should match the following:

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  BinaryName = ""
  CriuImagePath = ""
  CriuPath = ""
  CriuWorkPath = ""
  IoGid = 0
  IoUid = 0
  NoNewKeyring = false
  NoPivotRoot = false
  Root = ""
  ShimCgroup = ""
  SystemdCgroup = true
```

- Finally, to apply these changes, we need to restart containerd.

```shell
sudo systemctl restart containerd
```
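If you would rather not edit `config.toml` in vi, you can script the change instead. The sketch below assumes the freshly generated default file, in which the key reads `SystemdCgroup = false`. The function name `enable_systemd_cgroup` is hypothetical. After running it, restart containerd again as shown above.

```shell
#!/usr/bin/env bash
# Change "SystemdCgroup = false" to "SystemdCgroup = true" in a containerd
# config file, keeping the original indentation, then verify the result.
enable_systemd_cgroup() {
  local config="$1"
  sed -i 's/^\([[:space:]]*\)SystemdCgroup = false/\1SystemdCgroup = true/' "$config"
  # Return non-zero if the key is still not set, e.g. because it was absent.
  grep -q '^[[:space:]]*SystemdCgroup = true' "$config"
}
```

Call it as `sudo bash -c "$(declare -f enable_systemd_cgroup); enable_systemd_cgroup /etc/containerd/config.toml"`. The plain one-liner `sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml` performs the same substitution without the final check.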

To check that containerd is indeed running, use this command:

```shell
ps -ef | grep containerd
```

Expect output similar to this:

```
root 63087 1 0 13:16 ? 00:00:00 /usr/bin/containerd
```
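Because `ps -ef | grep containerd` also lists the `grep` process itself, `systemctl is-active --quiet containerd` is a less ambiguous check: it reports the service state through its exit status alone. The hypothetical `retry` helper below re-runs such a check a few times, which helps right after a restart. It is a minimal sketch rather than a robust wait loop.

```shell
#!/usr/bin/env bash
# Run a command until it succeeds, up to <attempts> tries with <delay> seconds
# between them. Returns 0 on the first success, 1 if every attempt fails.
# Usage: retry <attempts> <delay> <command> [args...]
retry() {
  local attempts="$1" delay="$2" i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then
      return 0
    fi
    # Do not sleep after the final failed attempt.
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `retry 10 2 systemctl is-active --quiet containerd && echo "containerd is active"` tries up to ten times, two seconds apart.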

Step 2. Install Kubernetes

With our container runtime installed and configured, we are ready to install Kubernetes.

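- Add the repository key and the repository.

```shell
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list
```

Note that this Google-hosted repository (apt.kubernetes.io) has since been deprecated and frozen in favor of the community-owned pkgs.k8s.io repository. On a fresh machine, you may need to follow the current pkgs.k8s.io instructions instead.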

- Update the package index and install the three Kubernetes packages.

```shell
sudo apt update -y
sudo apt install -y kubelet kubeadm kubectl
```
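A quick way to confirm that all three tools landed on the `PATH` is a check like the one below. It is a sketch, and `missing_commands` is a hypothetical name. `kubeadm version` and `kubectl version --client` then show the installed versions.

```shell
#!/usr/bin/env bash
# Print each named command that cannot be found on PATH.
missing_commands() {
  local cmd
  for cmd in "$@"; do
    # `command -v` succeeds only if the name resolves to something runnable.
    command -v "$cmd" > /dev/null 2>&1 || echo "$cmd"
  done
}
```

`missing_commands kubelet kubeadm kubectl` should print nothing on both VMs. The official kubeadm install guide also pins these packages with `sudo apt-mark hold kubelet kubeadm kubectl`, so that a routine upgrade does not change their versions unexpectedly.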

- Set the appropriate hostname for each machine. On the master node:

```shell
sudo hostnamectl set-hostname "master-node"
exec bash
```

And on the worker node:

```shell
sudo hostnamectl set-hostname "worker-node"
exec bash
```

- Add the new hostnames to the `/etc/hosts` file on both servers:

```shell
sudo vi /etc/hosts
```

Add these entries:

```
160.129.148.40 master-node
153.139.228.122 worker-node
```

Remember to replace the IPs with those of your systems.
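To avoid adding duplicate lines when you repeat this step, you can append the entries only when the hostname is not already listed. This is a sketch. The function name `add_host_entry` and its optional file argument are our own, and it treats a hostname listed under any IP as already present.

```shell
#!/usr/bin/env bash
# Append "IP hostname" to a hosts file unless that hostname is already listed.
# The file defaults to /etc/hosts, which requires root to modify.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Fields 2..NF of a non-comment hosts line are hostnames and aliases.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    echo "$name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

With your own IPs, run on both machines: `sudo bash -c "$(declare -f add_host_entry); add_host_entry 160.129.148.40 master-node; add_host_entry 153.139.228.122 worker-node"`.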

- Set up the firewall by adding the following rules on the master node:

```shell
sudo ufw allow 6443/tcp
sudo ufw allow 2379/tcp
sudo ufw allow 2380/tcp
sudo ufw allow 10250/tcp
sudo ufw allow 10251/tcp
sudo ufw allow 10252/tcp
sudo ufw allow 10255/tcp
sudo ufw reload
```

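- And these rules on the worker node:

```shell
sudo ufw allow 10251/tcp
sudo ufw allow 10255/tcp
sudo ufw reload
```

According to the Kubernetes "Ports and Protocols" reference, worker nodes need 10250/tcp (kubelet API) and 30000-32767/tcp (NodePort Services). Current releases serve kube-scheduler and kube-controller-manager on 10259/tcp and 10257/tcp rather than 10251 and 10252, so adjust these rules if your firewall is enabled.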
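To see which of the intended ports still lack a rule, you can filter the output of `ufw status`. The sketch below assumes ufw's default table layout, with lines such as `6443/tcp   ALLOW   Anywhere`. It only handles single TCP ports, not ranges. The function name `missing_ufw_ports` is hypothetical.

```shell
#!/usr/bin/env bash
# Read `ufw status` output on stdin and print each given TCP port that has
# no ALLOW rule. Assumes the default "To / Action / From" table layout.
missing_ufw_ports() {
  local rules port
  rules=$(cat)
  for port in "$@"; do
    if ! awk -v p="$port/tcp" '$1 == p && $2 == "ALLOW" { found = 1 } END { exit !found }' <<< "$rules"; then
      echo "$port"
    fi
  done
}
```

On the master node, for example: `sudo ufw status | missing_ufw_ports 6443 2379 2380 10250`. If ufw is inactive, `ufw status` prints only `Status: inactive`, so every port is reported as missing.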

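- By default, the kubelet refuses to start while swap is enabled, so we need to disable swap on both machines. The `sed` command comments out the swap entries in `/etc/fstab` so that swap stays off after a reboot.

```shell
sudo swapoff -a
sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
```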
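The `sed` command above adds a second `#` if you run it twice, and it only matches swap entries separated by single spaces. The variant below is a sketch that skips lines already commented out and also accepts tabs. It also checks `/proc/swaps`, which always starts with a header line, so any further line means a swap area is still active. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Comment out active swap entries in an fstab-style file (default /etc/fstab).
# Lines already starting with '#' are skipped, so repeated runs are harmless.
disable_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Succeed only if a /proc/swaps-style file lists no swap areas beyond its header.
swap_is_off() {
  [ "$(wc -l < "${1:-/proc/swaps}")" -le 1 ]
}
```

After `sudo swapoff -a`, run `sudo bash -c "$(declare -f disable_swap_entries); disable_swap_entries"`, then `swap_is_off && echo "swap is off"`.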

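- Finally, enable the kubelet service on both systems so that it starts at boot.

```shell
sudo systemctl enable kubelet
```

Until `kubeadm init` or `kubeadm join` runs, the kubelet restarts every few seconds while it waits for its configuration. This is expected.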

Step 3. Set up the cluster

With our container runtime and Kubernetes modules installed, we are ready to initialize our Kubernetes cluster.

- Run the following command on the master node to allow Kubernetes to fetch the required images before cluster initialization:

```shell
sudo kubeadm config images pull
```

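- Initialize the cluster on the master node. The `--pod-network-cidr` range below is the default pod network used by flannel, the pod network we deploy later.

```shell
sudo kubeadm init --pod-network-cidr=10.244.0.0/16
```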
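`kubeadm init` can also take its settings from a configuration file instead of flags. The sketch below makes some assumptions. It uses the `kubeadm.k8s.io/v1beta3` config API, which matches the v1.24 release shown in this article. It also states the kubelet's `systemd` cgroup driver explicitly, to match the containerd setting from Step 1; kubeadm already defaults to `systemd` since v1.22. The helper name `write_kubeadm_config` is hypothetical.

```shell
#!/usr/bin/env bash
# Write a kubeadm config equivalent to --pod-network-cidr=10.244.0.0/16,
# plus an explicit systemd cgroup driver for the kubelet.
write_kubeadm_config() {
  local out="${1:-kubeadm-config.yaml}"
  cat > "$out" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
networking:
  podSubnet: 10.244.0.0/16
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

To use it, run `write_kubeadm_config` and then `sudo kubeadm init --config kubeadm-config.yaml` in place of the command above. kubeadm refuses to mix `--config` with flags such as `--pod-network-cidr`.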

The initialization may take a few moments to finish. Expect an output similar to the following:

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 102.130.122.60:6443 --token s3v1c6.dgufsxikpbn9kflf \
        --discovery-token-ca-cert-hash sha256:b8c63b1aba43ba228c9eb63133df81632a07dc780a92ae2bc5ae101ada623e00
```

You will see a `kubeadm join` command at the end of the output. Copy it and save it to a file. We will run this command on the worker node so that it can join the cluster. But fear not: if you forget to save it or misplace it, you can regenerate it using this command:

```shell
sudo kubeadm token create --print-join-command
```
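If you want to double-check the `--discovery-token-ca-cert-hash` part of a saved join command, you can recompute it from the cluster CA certificate. The pipeline below follows the one given in the kubeadm join documentation. Wrapping it in a function named `ca_cert_hash` is our own choice, and it assumes the default RSA CA key that kubeadm generates.

```shell
#!/usr/bin/env bash
# Print the SHA-256 hash of the CA certificate's DER-encoded public key,
# i.e. the hex part of --discovery-token-ca-cert-hash sha256:<hex>.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master node, `echo "sha256:$(ca_cert_hash)"` should match the value in the saved join command. `sudo kubeadm token list` shows which bootstrap tokens are still valid; by default they expire after 24 hours.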

- Now, as a regular user, create a `.kube` directory in your home directory, copy the admin kubeconfig into it, and give your user ownership of the copy so that `kubectl` can read it:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

If you are working as root instead, you can skip the copy and point `kubectl` at the original file:

```shell
export KUBECONFIG=/etc/kubernetes/admin.conf
```

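- Deploy a pod network to our cluster. This is required so that pods can communicate across nodes. Until a pod network is installed, the nodes report `NotReady` and the CoreDNS pods stay `Pending`.

```shell
kubectl apply -f https://github.com/coreos/flannel/raw/master/Documentation/kube-flannel.yml
```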

Expect an output like this:

```
podsecuritypolicy.policy/psp.flannel.unprivileged created
clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.apps/kube-flannel-ds created
```

- Use the `get nodes` command to verify that our master node is ready.

```shell
kubectl get nodes
```

Expect the following output:

```
NAME          STATUS   ROLES           AGE     VERSION
master-node   Ready    control-plane   9m50s   v1.24.2
```
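When you script this check instead of reading the table by eye, you can filter the same output for nodes whose STATUS is anything other than `Ready`. This is a sketch that assumes the default column order shown above, and the function name `not_ready_nodes` is hypothetical. `kubectl wait --for=condition=Ready node --all --timeout=120s` is a built-in way to block until all nodes are ready.

```shell
#!/usr/bin/env bash
# Read `kubectl get nodes` output on stdin and print the name of every node
# whose STATUS column is not exactly "Ready". A header row and blank lines
# are skipped.
not_ready_nodes() {
  awk 'NF && $1 != "NAME" && $2 != "Ready" { print $1 }'
}
```

`kubectl get nodes | not_ready_nodes` prints nothing once every node is ready.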

- Also check whether all the default pods are running:

```shell
kubectl get pods --all-namespaces
```

You should get an output like this:

```
NAMESPACE     NAME                                  READY   STATUS    RESTARTS   AGE
kube-system   coredns-6d4b75cb6d-dxhvf              1/1     Running   0          10m
kube-system   coredns-6d4b75cb6d-nkmj4              1/1     Running   0          10m
kube-system   etcd-master-node                      1/1     Running   0          11m
kube-system   kube-apiserver-master-node            1/1     Running   0          11m
kube-system   kube-controller-manager-master-node   1/1     Running   0          11m
kube-system   kube-flannel-ds-jxbvx                 1/1     Running   0          6m35s
kube-system   kube-proxy-mhfqh                      1/1     Running   0          10m
kube-system   kube-scheduler-master-node            1/1     Running   0          11m
```
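A similar filter can flag pods that are not `Running` or whose READY count is short (for example `0/1`). This is a sketch that assumes the default column order of `kubectl get pods --all-namespaces`. The name `unhealthy_pods` is hypothetical. Pods of completed Jobs would also be listed, because their status is `Completed`.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods --all-namespaces` output on stdin and print
# namespace/name for every pod that is not Running with all containers ready.
unhealthy_pods() {
  awk 'NF == 0 || (NR == 1 && $1 == "NAMESPACE") { next }
       {
         # READY looks like "1/1": ready containers / total containers.
         split($3, ready, "/")
         if ($4 != "Running" || ready[1] != ready[2]) print $1 "/" $2
       }'
}
```

`kubectl get pods --all-namespaces | unhealthy_pods` prints nothing when every default pod is up.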

- We are now ready to move to the worker node. Run the `kubeadm join` command you saved from the `kubeadm init` output on the worker node, as root (with `sudo`). You should see an output similar to the following:

```
This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
```

- If you go back to the master node, you should now be able to see the worker node in the output of the `get nodes` command.

```shell
kubectl get nodes
```

And the output should look as follows:

```
NAME          STATUS   ROLES                  AGE      VERSION
master-node   Ready    control-plane,master   18m40s   v1.24.2
worker-node   Ready    <none>                 3m2s     v1.24.2
```

- Finally, to set the proper role for the worker node, run this command on the master node:

```shell
kubectl label node worker-node node-role.kubernetes.io/worker=worker
```

To verify that the role was set:

```shell
kubectl get nodes
```

The output should show the worker node’s role as follows:

```
NAME          STATUS   ROLES                  AGE     VERSION
master-node   Ready    control-plane,master   5m12s   v1.24.1
worker-node   Ready    worker                 2m55s   v1.24.1
```

That’s it! We have successfully set up a two-node Kubernetes cluster!