Deploy Kubernetes Cluster on Debian 11 with Containerd

Kubernetes has become the go-to platform for building, managing, and scaling IT infrastructure.

Using a few YAML configuration files, you can create a self-sufficient cluster with application pods distributed across various nodes. Kubernetes restarts pods that crash, scales them up in times of high traffic, and reschedules them onto a different node if the original one goes down.

In this article, we’ll walk you through the steps to deploy a Kubernetes cluster on two Debian 11 machines using Containerd.

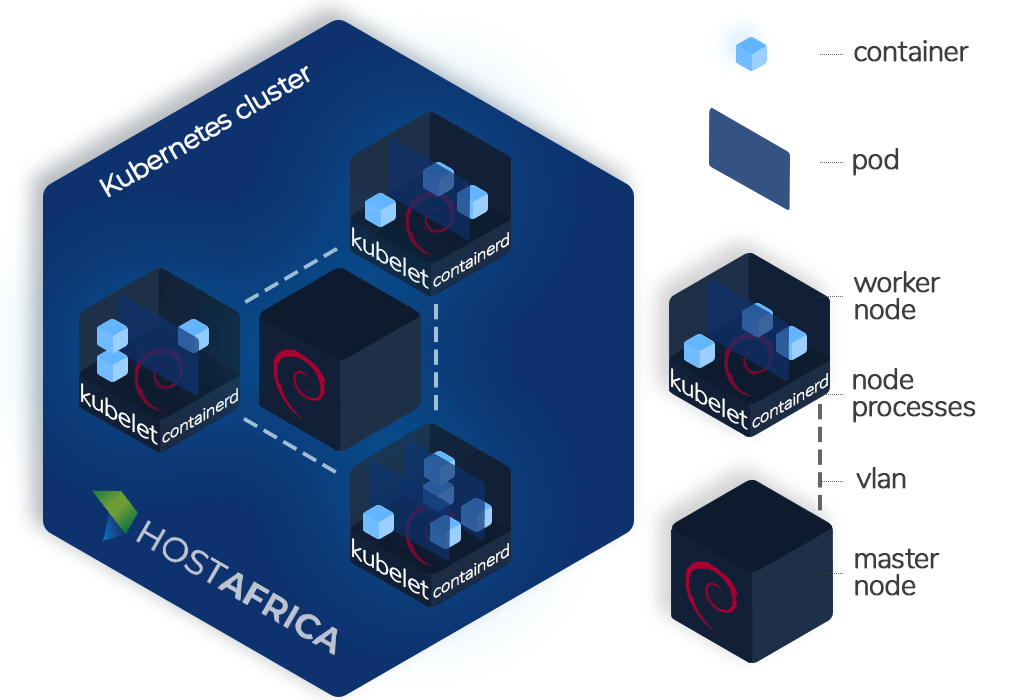

What is a Kubernetes cluster?

A Kubernetes cluster comprises a master node and one or more worker nodes.

The master node is the virtual machine (VM) that oversees the administration and management of the entire cluster. It’s responsible for distributing the workload across the worker nodes.

The worker nodes are VMs that actually host your containerized applications. It’s recommended to only use VMs (aka cloud servers) to run a Kubernetes cluster, and not system containers (aka VPS), as the latter may pose scalability issues.

Every worker node is made up of:

- Kubelet: The primary node agent, which registers the node with the kube-apiserver running on the master node.

- Kube-proxy: A network proxy that maintains and implements rules for network communication, inside and outside of the cluster.

- Container runtime: This is the software responsible for running containers. For a long time, Docker remained the container runtime of choice. However, Kubernetes deprecated its built-in Docker integration (dockershim) in version 1.20 and removed it in version 1.24. This is why we will be using Containerd as our runtime in this guide.

This doesn’t mean that container images built with Docker will no longer run inside a Kubernetes cluster. Docker builds standard OCI images, and Containerd runs them unchanged.

Containers are lightweight software packages that contain all the dependencies required to run an application in an isolated environment; nothing more, nothing less.

The most popular containerization platform is Docker. Kubernetes creates groups of containers, known as pods, which are its most fundamental building blocks. Pods can communicate with other pods, the kube-apiserver, and with the outside world.

To set up a Kubernetes cluster, you will have to install three packages (and all their dependencies) on every node: kubelet, kubeadm, and kubectl. But before we do that, we will install Containerd (our container runtime) on all the nodes.

For the purpose of this article, we will create a Kubernetes cluster with 2 Debian 11 nodes: 1 master, 1 worker. Let’s begin!

Step 1. Install Containerd

Execute these commands on all the nodes to install Containerd:

Install and configure prerequisites

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

sudo sysctl --system
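Before moving on, it can help to confirm that the settings above took effect. The following is a minimal sketch rather than part of the original procedure. The function name check_kernel_prereqs and its optional argument are hypothetical, and the sketch assumes the three sysctl keys are exposed as files under /proc/sys, as they are on a standard Linux kernel once br_netfilter is loaded.

```shell
#!/bin/sh
# check_kernel_prereqs [PROC_ROOT]
# Hypothetical helper: reports whether each sysctl key set above reads back as 1.
# PROC_ROOT defaults to /proc; the argument only exists so the check can be
# pointed at a test directory.
check_kernel_prereqs() {
  local proc_root="${1:-/proc}"
  local status=0
  local key path value
  for key in net/bridge/bridge-nf-call-iptables \
             net/bridge/bridge-nf-call-ip6tables \
             net/ipv4/ip_forward; do
    path="$proc_root/sys/$key"
    if [ -r "$path" ]; then
      value="$(cat "$path")"
    else
      # For the bridge keys this usually means br_netfilter is not loaded.
      value="missing"
    fi
    if [ "$value" = "1" ]; then
      echo "ok   $key = 1"
    else
      echo "FAIL $key = $value"
      status=1
    fi
  done
  return "$status"
}
```

On a node, calling check_kernel_prereqs with no arguments reads the live values and returns a non-zero status if any of them is not 1.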

Install Containerd

sudo apt-get update

sudo apt-get -y install containerd

A successful installation should yield output similar to the following:

Reading package lists… Done

Building dependency tree… Done

Reading state information… Done

The following additional packages will be installed:

runc

Suggested packages:

containernetworking-plugins

Recommended packages:

criu

The following NEW packages will be installed:

containerd runc

0 upgraded, 2 newly installed, 0 to remove and 23 not upgraded.

Need to get 20.9 MB/23.3 MB of archives.

After this operation, 96.2 MB of additional disk space will be used.

Get:1 http://deb.debian.org/debian bullseye/main amd64 containerd amd64 1.4.12~ds1-1~deb11u1 [20.9 MB]

Fetched 20.9 MB in 3s (7,397 kB/s)

Selecting previously unselected package runc.

(Reading database … 29290 files and directories currently installed.)

Preparing to unpack …/runc_1.0.0~rc93+ds1-5+b2_amd64.deb …

Unpacking runc (1.0.0~rc93+ds1-5+b2) …

Selecting previously unselected package containerd.

Preparing to unpack …/containerd_1.4.12~ds1-1~deb11u1_amd64.deb …

Unpacking containerd (1.4.12~ds1-1~deb11u1) …

Setting up runc (1.0.0~rc93+ds1-5+b2) …

Setting up containerd (1.4.12~ds1-1~deb11u1) …

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /lib/systemd/system/containerd.service.

Processing triggers for man-db (2.9.4-2) …

Generate the default configuration file for Containerd:

sudo mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

Set cgroup driver to systemd

To set the cgroup driver to systemd, edit the configuration file:

sudo vi /etc/containerd/config.toml

Find the following section: [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

And set the value of SystemdCgroup to true

This is how your file should look after you are done:

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes]

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]

runtime_type = "io.containerd.runc.v2"

runtime_engine = ""

runtime_root = ""

privileged_without_host_devices = false

base_runtime_spec = ""

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

SystemdCgroup = true
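If you would rather not edit the file by hand, the same change can be scripted. The sketch below is one possible approach, not the method used in this guide. The function name enable_systemd_cgroup is hypothetical, and the sketch assumes the runc options header appears exactly once in the file. It handles both cases: a SystemdCgroup key that is already present, and a generated file in which the key is absent.

```shell
#!/bin/sh
# enable_systemd_cgroup FILE
# Hypothetical helper: makes sure FILE contains "SystemdCgroup = true"
# in the runc options table.
enable_systemd_cgroup() {
  local file="$1"
  local tmp
  tmp="$(mktemp)" || return 1
  if grep -q 'SystemdCgroup' "$file"; then
    # Key already present: rewrite its value, keeping the indentation.
    sed 's/SystemdCgroup[[:space:]]*=.*/SystemdCgroup = true/' "$file" > "$tmp"
  else
    # Key absent: add it on the line after the runc options header.
    awk '{ print }
         /containerd\.runtimes\.runc\.options\]/ { print "            SystemdCgroup = true" }' \
      "$file" > "$tmp"
  fi
  # Write back through cat so the original file keeps its owner and mode.
  cat "$tmp" > "$file" && rm -f "$tmp"
}
```

Because /etc/containerd/config.toml is owned by root, the function has to run in a root shell when pointed at the real file. Running it against a copy first lets you inspect the result before replacing the original.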

Restart Containerd

Finally, restart Containerd to apply the configuration:

sudo systemctl restart containerd

To verify that Containerd is running, execute this command:

ps -ef | grep containerd

You should get an output like this:

root 16953 1 1 10:39 ? 00:00:00 /usr/bin/containerd

root 16968 16308 0 10:39 pts/0 00:00:00 grep containerd

Step 2. Install Kubernetes

Now that Containerd is installed on both our nodes, we can start our Kubernetes installation.

Install curl on both nodes

sudo apt-get install curl

Add Kubernetes key on both nodes

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

Add the Kubernetes repository on both nodes

cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list

deb https://apt.kubernetes.io/ kubernetes-xenial main

EOF

Update the package index on both nodes, and install all the Kubernetes packages

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

Set hostnames

On the master node, run:

sudo hostnamectl set-hostname "master-node"

exec bash

On the worker node, run:

sudo hostnamectl set-hostname "w-node1"

exec bash

Set the hostnames in the /etc/hosts file of the worker:

cat <<EOF | sudo tee -a /etc/hosts

160.119.248.60 master-node

160.119.248.162 node1 w-node1

EOF
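Appending to /etc/hosts more than once leaves duplicate entries behind. As a hedged alternative, the sketch below only adds a name when it is not already listed. The function name add_host_entry and its optional third argument are hypothetical and exist purely for illustration.

```shell
#!/bin/sh
# add_host_entry IP NAME [FILE]
# Hypothetical helper: appends "IP NAME" to FILE (default /etc/hosts)
# unless NAME already appears as a hostname on a non-comment line.
add_host_entry() {
  local ip="$1"
  local name="$2"
  local file="${3:-/etc/hosts}"
  if awk -v n="$name" '
       !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$file"; then
    echo "$name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For the real /etc/hosts the function needs a root shell. With the addresses used in this article, the calls would be add_host_entry 160.119.248.60 master-node followed by add_host_entry 160.119.248.162 w-node1.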

Configure firewall rules

Set up the following firewall rules on the master node

sudo ufw allow 6443/tcp

sudo ufw allow 2379/tcp

sudo ufw allow 2380/tcp

sudo ufw allow 10250/tcp

sudo ufw allow 10251/tcp

sudo ufw allow 10252/tcp

sudo ufw allow 10255/tcp

sudo ufw reload

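Set up the following firewall rules on the worker node. Port 10250 is the kubelet API, which the master node must be able to reach:

sudo ufw allow 10250/tcp

sudo ufw allow 10251/tcp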

sudo ufw allow 10255/tcp

sudo ufw reload
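The repeated ufw commands can also be expressed as a loop. This is only a sketch of that idea: the function name allow_tcp_ports and the DRY_RUN variable are hypothetical, and it relies on nothing beyond the ufw allow PORT/tcp form already used in this section.

```shell
#!/bin/sh
# allow_tcp_ports PORT...
# Hypothetical helper: opens each listed TCP port with ufw.
# Set DRY_RUN=1 to print the commands instead of running them.
allow_tcp_ports() {
  local port
  for port in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "sudo ufw allow $port/tcp"
    else
      sudo ufw allow "$port/tcp"
    fi
  done
}
```

On the master node, the list from this section would be passed as allow_tcp_ports 6443 2379 2380 10250 10251 10252 10255, followed by sudo ufw reload. Running it once with DRY_RUN=1 shows exactly what would be executed.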

Turn swap off on both nodes (required for Kubelet to work)

sudo swapoff -a
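Note that swapoff only lasts until the next reboot. To keep swap disabled permanently, the swap entries in /etc/fstab also have to be commented out. The sketch below shows one way to do that. The function name comment_out_swap is hypothetical, and the sketch assumes swap is configured through fstab lines whose third field is swap.

```shell
#!/bin/sh
# comment_out_swap [FILE]
# Hypothetical helper: prefixes "#" to every active line in FILE
# (default /etc/fstab) whose filesystem type field is "swap".
comment_out_swap() {
  local file="${1:-/etc/fstab}"
  local tmp
  tmp="$(mktemp)" || return 1
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next }
       { print }' "$file" > "$tmp"
  # Write back through cat so the original file keeps its owner and mode.
  cat "$tmp" > "$file" && rm -f "$tmp"
}
```

Editing the real /etc/fstab requires a root shell, and it is worth keeping a backup copy of the file before changing it.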

Enable Kubelet service on both nodes:

sudo systemctl enable kubelet

Step 3. Deploy the Kubernetes cluster

Initialise cluster

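On the master node, execute the following command to initialise the Kubernetes cluster. The --pod-network-cidr value matches the default pod network (10.244.0.0/16) in the Flannel manifest that we apply later in this step:

sudo kubeadm init --pod-network-cidr=10.244.0.0/16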

The process can take a few minutes. The last few lines of your output should look similar to this:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 160.119.248.60:6443 --token l5hlte.jvsh7jdrp278lqlr \

--discovery-token-ca-cert-hash sha256:07a3716ea4082fe158dce5943c7152df332376b39ea5e470e52664a54644e00a

Copy the kubeadm join command from the end of the above output. We will be using this command to add worker nodes to our cluster.

If you forgot to copy the command or misplaced it, don’t worry; you can generate a new one by executing this command: sudo kubeadm token create --print-join-command
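A join command that was copied from a terminal or a chat window can easily lose a character. The sketch below checks the two arguments for shape before you run the command on a worker. The function name check_join_args is hypothetical. It relies on the documented bootstrap token format (six characters, a dot, then sixteen characters, all lowercase letters or digits) and on a SHA-256 hash being 64 hexadecimal digits. It cannot tell whether a token has expired; by default, tokens are valid for 24 hours.

```shell
#!/bin/sh
# check_join_args TOKEN HASH
# Hypothetical helper: verifies that TOKEN and HASH are well-formed.
# It does not contact the cluster and cannot detect an expired token.
check_join_args() {
  local token="$1"
  local hash="$2"
  if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
    echo "malformed token: $token" >&2
    return 1
  fi
  if ! printf '%s\n' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$'; then
    echo "malformed CA cert hash: $hash" >&2
    return 1
  fi
  echo "join arguments look well-formed"
}
```

The first argument is the value passed to --token and the second is the value passed to --discovery-token-ca-cert-hash, including its sha256: prefix.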

Create and claim directory

As indicated by the output above, we need to create a directory and claim its ownership to start managing our cluster.

Run the following commands:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Deploy pod network to cluster

We will use Flannel to deploy a pod network to our cluster:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

You should see the following output after running the above command:

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

You should be able to verify that your master node is ready now:

kubectl get nodes

Output:

NAME          STATUS   ROLES                  AGE   VERSION

master-node   Ready    control-plane,master   90s   v1.23.3

…and that all the pods are up and running:

kubectl get pods --all-namespaces

Output:

NAMESPACE     NAME                                  READY   STATUS    RESTARTS   AGE

kube-system   coredns-64897985d-5r6zx               0/1     Running   0          22m

kube-system   coredns-64897985d-zplbs               0/1     Running   0          22m

kube-system   etcd-master-node                      1/1     Running   0          22m

kube-system   kube-apiserver-master-node            1/1     Running   0          22m

kube-system   kube-controller-manager-master-node   1/1     Running   0          22m

kube-system   kube-flannel-ds-brncs                 0/1     Running   0          22m

kube-system   kube-flannel-ds-vwjgc                 0/1     Running   0          22m

kube-system   kube-proxy-bvstw                      1/1     Running   0          22m

kube-system   kube-proxy-dnzmw                      1/1     Running   0          20m

kube-system   kube-scheduler-master-node            1/1     Running   0          22m
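A pod can report Running while its containers are not yet ready, which shows up as 0/1 in the READY column. The sketch below filters the pod listing down to such pods. The function name not_ready_pods is hypothetical, and the sketch assumes the six-column layout shown above, with the header suppressed by kubectl's --no-headers flag.

```shell
#!/bin/sh
# not_ready_pods
# Hypothetical helper: reads "kubectl get pods --all-namespaces --no-headers"
# output on stdin and prints every pod whose READY count is incomplete.
not_ready_pods() {
  awk '{
    split($3, ready, "/")               # READY column, for example "0/1"
    if (ready[1] != ready[2])
      print $1 "/" $2, $3, $4           # namespace/name READY STATUS
  }'
}
```

Typical use on the master node is kubectl get pods --all-namespaces --no-headers piped into not_ready_pods. An empty result means every listed pod has all of its containers ready.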

Add nodes

At this point, we are ready to add nodes to our cluster.

Copy the kubeadm join command from the kubeadm init output in the Initialise cluster section above, and run it as root on the worker node:

sudo kubeadm join 160.119.248.60:6443 --token l5hlte.jvsh7jdrp278lqlr \

--discovery-token-ca-cert-hash sha256:07a3716ea4082fe158dce5943c7152df332376b39ea5e470e52664a54644e00a

Output:

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

To verify that the worker node indeed got added to the cluster, execute the following command on the master node:

kubectl get nodes

Output:

NAME          STATUS   ROLES                  AGE     VERSION

master-node   Ready    control-plane,master   3m40s   v1.23.3

w-node1       Ready    <none>                 83s     v1.23.3
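A freshly joined node usually takes a little while to move from NotReady to Ready. The sketch below turns the node listing into a pass or fail result that a script can poll. The function name all_nodes_ready is hypothetical, and the sketch assumes the column layout shown above, with the header suppressed by kubectl's --no-headers flag.

```shell
#!/bin/sh
# all_nodes_ready
# Hypothetical helper: reads "kubectl get nodes --no-headers" output on stdin.
# Returns 0 only if at least one node is listed and every STATUS is exactly
# "Ready". A cordoned node ("Ready,SchedulingDisabled") counts as not ready.
all_nodes_ready() {
  awk 'NF {
         count++
         if ($2 != "Ready") { print "not ready: " $1 " (" $2 ")"; bad = 1 }
       }
       END { exit (bad || count == 0) ? 1 : 0 }'
}
```

On the master node, a simple polling loop could run kubectl get nodes --no-headers piped into all_nodes_ready every few seconds until it succeeds.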

You can set the role for your worker node using:

kubectl label node w-node1 node-role.kubernetes.io/worker=worker

Get nodes again to verify:

kubectl get nodes

Output:

NAME          STATUS   ROLES                  AGE     VERSION

master-node   Ready    control-plane,master   5m12s   v1.23.3

w-node1       Ready    worker                 2m55s   v1.23.3

To add more worker nodes, complete Steps 1 and 2 on each additional machine, then repeat this Add nodes section on it.

That’s it! Your two-node Kubernetes cluster is up and running!